Research

Would you trust a Faulty Robot?

A human-robot interaction study investigating trust dynamics between humans and incorrect robots

Spring 2024, 16-467: Human-Robot Interaction

Exploring how humans respond to suggestions from a robot, especially when they are wrong.

Research Context and Motivation

This project was conducted as part of a Human-Robot Interaction course with Henny Admoni at Carnegie Mellon University's Robotics Institute. The research question was straightforward yet significant:

How do humans behave when a robot provides an incorrect answer? Do they push back, or follow along?

The study was inspired by, and serves as a conceptual replication of, the work by Maha Salem et al. titled Would You Trust a (Faulty) Robot? Effects of Error, Task Type and Personality on Human-Robot Cooperation and Trust (IEEE). Their findings indicated that while a robot's errors influenced perceptions of its trustworthiness and reliability, they did not necessarily prevent people from following its suggestions. Our goal was to explore these effects in a new task context and to examine how explanation and error together shape trust and cooperation.

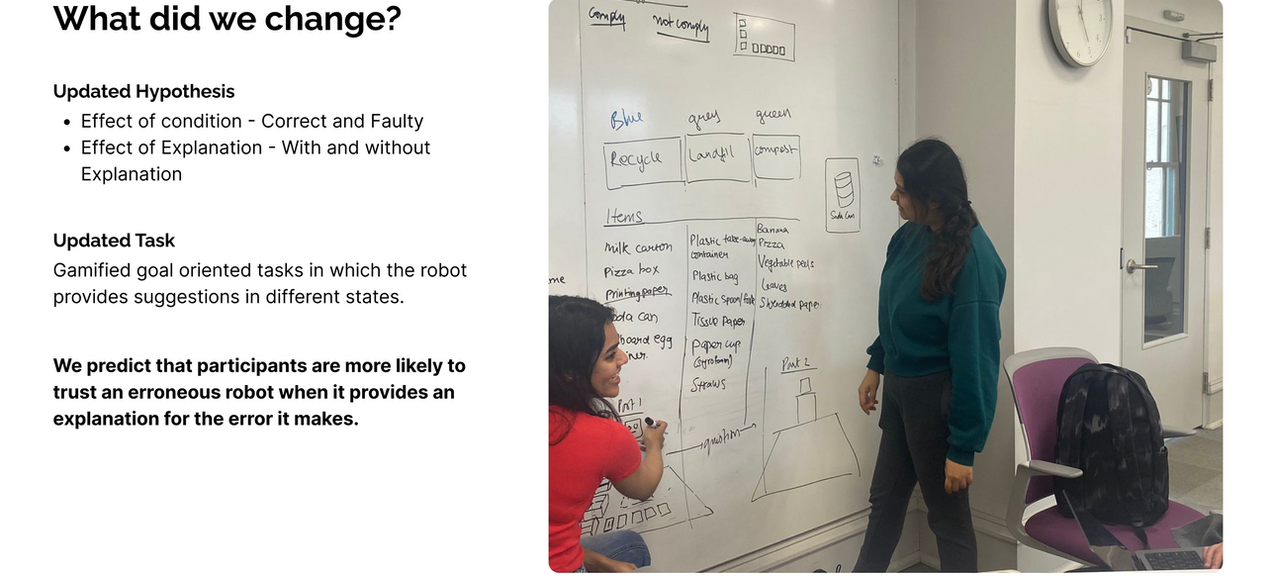

Designing the Experimental Framework

We began with a series of brainstorming sessions to identify a task that was both familiar and slightly ambiguous. After considering scenarios such as cooking, travel planning, and storytelling, we settled on waste sorting: an everyday activity that most people understand but often feel uncertain about. This task provided an ideal context for observing how participants respond to confident but potentially incorrect robotic suggestions. Thus, the recycling game was born.

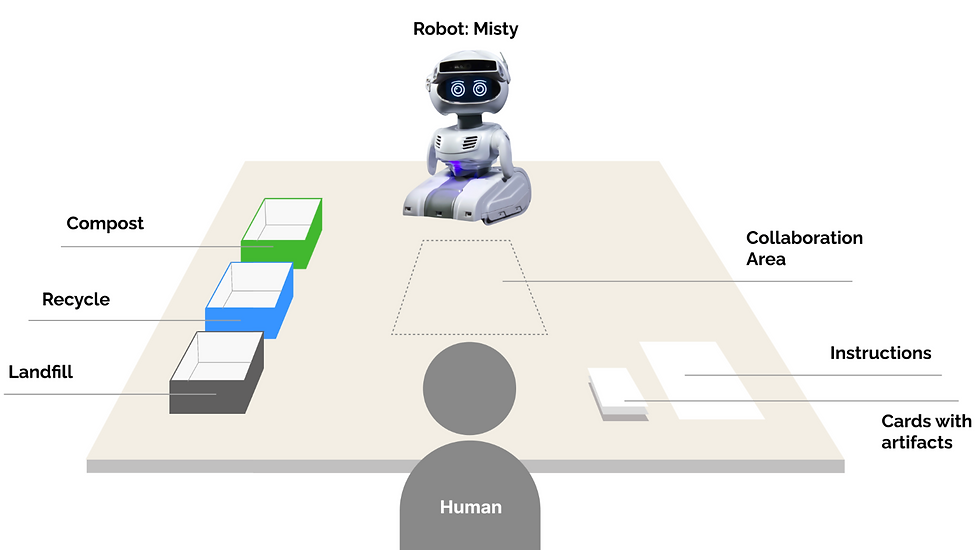

Participants were asked to sort everyday waste items (e.g., banana peels, plastic forks, tissue paper) into one of three bins: recycling, compost, or landfill. Misty, an educational assistive robot, offered guidance on each item.

Ideating the design procedure for the study

Study Design: Recycling Game

Research Question and Hypotheses

We formulated two primary hypotheses:

- Correct robots are more trusted. Participants would be more likely to trust a robot that consistently provides correct answers.
- Explanations increase believability, even when incorrect. A faulty robot that offers explanations would be more trusted than a faulty robot that provides no reasoning.

These hypotheses sought to uncover the mechanisms by which humans assign credibility to robotic collaborators.

Experimental Task Description

The card-sorting game featured printed images of common waste items. This was a between-subjects study, with 5 participants assigned to each of the four experimental conditions (20 participants in total). Each participant placed cards one at a time in front of Misty, who then suggested a bin, with or without an explanation depending on the assigned condition.

We tested four robot behaviors:

- ✅ Correct + Explanation
- ✅ Correct + No Explanation
- ❌ Faulty + Explanation
- ❌ Faulty + No Explanation

Research Conditions - Independent Variables

Examples of faulty explanations Misty gave in Condition 1 (Faulty + Explanation):

- "Banana peels should go into recycling because recent technology can turn banana waste into bioplastics."
- "Cardboard belongs in recycling because toilet paper rolls are also made from recycled material."

Data Collection and Evaluation Metrics

Our data collection consisted of:

- Behavioral observation, tracking how often participants complied with Misty's suggestions
- Surveys, assessing trust, helpfulness, and perceived reliability
- Post-task interviews, exploring participant reasoning and reflections

Data Collection: Post-workshop Interviews and Surveys

Behavioral Typologies and Participant Responses

Participants' behavior clustered into three categories:

- 🧠 Rational Thinkers: carefully evaluated Misty's suggestions against their own knowledge; often from design backgrounds.
- 🤖 Autopilots: followed Misty's guidance without critical analysis, often prioritizing game performance.
- 🔍 Skeptics: actively questioned Misty, particularly when inconsistencies emerged; common among CS and robotics students.


Analysis of Trust Formation and Breakdown

- Knowledge gaps encouraged compliance. When uncertain, participants often relied on Misty, even when she was wrong.
- False explanations increased trust. Explanations, even if inaccurate, added credibility.
- Participants contradicted themselves. Although many claimed they would not trust a faulty robot, their actions indicated otherwise.
- Cognitive dissonance was evident. When debriefed, some participants laughed, others justified their decisions, and a few admitted second-guessing Misty's advice but choosing to follow it regardless.
- Preexisting biases influenced behavior. Those already skeptical of robots remained cautious; others were more accepting.
- Trust evolved over time. Some participants initially trusted Misty, but their confidence declined as errors accumulated.

Broader Reflections on Human Perception of AI

This study highlights a broader implication for how we interact with AI systems in daily life. When people lack subject-matter knowledge, they tend to default to trusting confident, well-articulated responses, even when those responses are incorrect. This has important consequences for how we design AI systems in high-stakes areas such as health, finance, or sustainability.

We must ask: what happens when AI confidently provides misinformation in contexts where users do not have the expertise to question it? How can we design systems that invite healthy skepticism without eroding user trust?

The challenge is not just making AI accurate; it is making AI trustworthy, transparent, and accountable.
